T007 · Ligand-based screening: machine learning¶

Note: This talktorial is a part of TeachOpenCADD, a platform that aims to teach domain-specific skills and to provide pipeline templates as starting points for research projects.

Authors:

Jan Philipp Albrecht, CADD seminar 2018, Charité/FU Berlin

Jacob Gora, CADD seminar 2018, Charité/FU Berlin

Talia B. Kimber, 2019-2020, Volkamer lab

Andrea Volkamer, 2019-2020, Volkamer lab

Talktorial T007: This talktorial is part of the TeachOpenCADD pipeline described in the first TeachOpenCADD paper, comprising of talktorials T001-T010.

Aim of this talktorial¶

Due to larger available data sources, machine learning (ML) gained momentum in drug discovery and especially in ligand-based virtual screening. In this talktorial, we learn how to use different supervised ML algorithms to predict the activity of novel compounds against our target of interest (EGFR).

Contents in Theory¶

Data preparation: Molecule encoding

Machine learning (ML)

Supervised learning

Model validation and evaluation

Validation strategy: K-fold cross-validation

Performance measures

Contents in Practical¶

Load compound and activity data

Data preparation

Data labeling

Molecule encoding

Machine learning

Helper functions

Random forest classifier

Support vector classifier

Neural network classifier

Cross-validation

References¶

“Fingerprints in the RDKit” slides, G. Landrum, RDKit UGM 2012

Extended-connectivity fingerprints (ECFPs): Rogers, David, and Mathew Hahn. “Extended-connectivity fingerprints.” Journal of chemical information and modeling 50.5 (2010): 742-754.

Machine learning (ML):

Random forest (RF): Breiman, L. “Random Forests”. Machine Learning 45, 5–32 (2001).

Support vector machines (SVM): Cortes, C., Vapnik, V. “Support-vector networks”. Machine Learning 20, 273–297 (1995).

Artificial neural networks (ANN): Van Gerven, Marcel, and Sander Bohte. “Artificial neural networks as models of neural information processing.” Frontiers in Computational Neuroscience 11 (2017): 114.

Performance:

See also github notebook by B. Merget from J. Med. Chem., 2017, 60, 474−485

Activity cutoff \(pIC_{50} = 6.3\) used in this talktorial

Profiling Prediction of Kinase Inhibitors: Toward the Virtual Assay J. Med. Chem. (2017), 60, 474-485

Notebook accompanying the publication mentioned before: Notebook

Theory¶

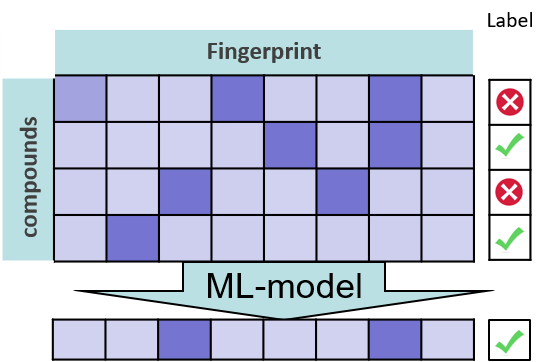

To successfully apply ML, we need a large data set of molecules, a molecular encoding, a label per molecule in the data set, and a ML algorithm to train a model. Then, we can make predictions for new molecules.

Figure 1: Machine learning overview: Molecular encoding, label, ML algorithm, prediction. Figure by Andrea Volkamer.

Data preparation: Molecule encoding¶

For ML, molecules need to be converted into a list of features. Often molecular fingerprints are used as representation.

The fingerprints used in this talktorial as implemented in RDKit (more info can be found in a presentation by G. Landrum) are:

maccs: ‘MACCS keys are 166 bit structural key descriptors in which each bit is associated with a SMARTS pattern.’ (see OpenEye’s

MACCSdocs)Morgan fingerprints (and ECFP): ‘Extended-Connectivity Fingerprints (ECFPs) are circular topological fingerprints designed for molecular characterization, similarity searching, and structure-activity modeling.’ (see ChemAxon’s

ECFPdocs) The original implementation of the ECFPs was done in Pipeline Pilot which is not open-source. Instead we use the implementation from RDKit which is called Morgan fingerprint. The two most important parameters of these fingerprints are the radius and fingerprint length. The first specifies the radius of circular neighborhoods considered for each atom. Here two radii are considered: 2 and 3. The length parameter specifies the length to which the bit string representation is hashed. The default length is 2048.

Machine learning (ML)¶

ML can be applied for (text adapted from scikit-learn page):

Classification (supervised): Identify which category an object belongs to (e.g. : Nearest neighbors, Naive Bayes, RF, SVM, …)

Regression: Prediction of a continuous-values attribute associated with an object

Clustering (unsupervised): Automated grouping of similar objects into sets (see also Talktorial T005)

Supervised learning¶

A learning algorithm creates rules by finding patterns in the training data.

Random Forest (RF): Ensemble of decision trees. A single decision tree splits the features of the input vector in a way that maximizes an objective function. In the random forest algorithm, the trees that are grown are de-correlated because the choice of features for the splits are chosen randomly.

Support Vector Machines (SVMs): SVMs can efficiently perform a non-linear classification using what is called the kernel trick, implicitly mapping their inputs into high-dimensional feature spaces. The classifier is based on the idea of maximizing the margin as the objective function.

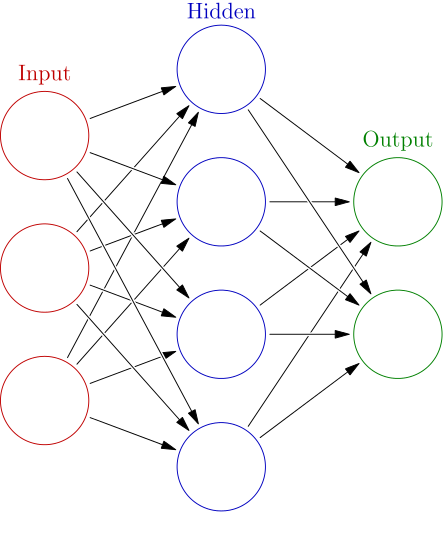

Artificial neural networks (ANNs): An ANN is based on a collection of connected units or nodes called artificial neurons which loosely model the neurons in a biological brain. Each connection, like the synapses in a biological brain, can transmit a signal from one artificial neuron to another. An artificial neuron that receives a signal can process it and then signal additional artificial neurons connected to it.

Figure 2: Example of a neural network with one hidden layer. Figure taken from Wikipedia.

Model validation and evaluation¶

Validation strategy: K-fold cross validation¶

This model validation technique splits the dataset in two groups in an iterative manner:

Training data set: Considered as the known dataset on which the model is trained

Test dataset: Unknown dataset on which the model is then tested

Process is repeated k-times

The goal is to test the ability of the model to predict data which it has never seen before in order to flag problems known as over-fitting and to assess the generalization ability of the model.

Performance measures¶

Sensitivity, also true positive rate

TPR = TP/(FN + TP)

Intuitively: Out of all actual positives, how many were predicted as positive?

Specificity, also true negative rate

TNR = TN/(FP + TN)

Intuitively: Out of all actual negatives, how many were predicted as negative?

Accuracy, also the trueness

ACC = (TP + TN)/(TP + TN + FP + FN)

Intuitively: Proportion of correct predictions.

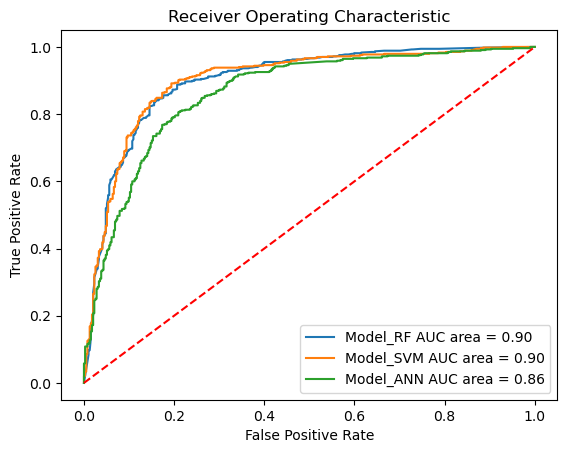

ROC-curve, receiver operating characteristic curve

A graphical plot that illustrates the diagnostic ability of our classifier

Plots the sensitivity against the specificity

AUC, the area under the ROC curve (AUC):

Describes the probability that a classifier will rank a randomly chosen positive instance higher than a negative one

Values between 0 and 1, the higher the better

What the model predicts |

True active |

True inactive |

|---|---|---|

active |

True Positive (TP) |

False Positive (FP) |

inactive |

False Negative (FN) |

True Negative (TN) |

Practical¶

[1]:

from pathlib import Path

from warnings import filterwarnings

import time

import pandas as pd

import numpy as np

from sklearn import svm, metrics, clone

from sklearn.ensemble import RandomForestClassifier

from sklearn.neural_network import MLPClassifier

from sklearn.model_selection import KFold, train_test_split

from sklearn.metrics import auc, accuracy_score, recall_score

from sklearn.metrics import roc_curve, roc_auc_score

import matplotlib.pyplot as plt

from rdkit import Chem

from rdkit.Chem import MACCSkeys, rdFingerprintGenerator

from teachopencadd.utils import seed_everything

# Silence some expected warnings

filterwarnings("ignore")

# Fix seed for reproducible results

SEED = 22

seed_everything(SEED)

[2]:

# Set path to this notebook

HERE = Path(_dh[-1])

DATA = HERE / "data"

Load compound and activity data¶

Let’s start by loading our data, which focuses on the Epidermal growth factor receptor (EGFR) kinase. The csv file from Talktorial T002 is loaded into a dataframe with the important columns:

CHEMBL-ID

SMILES string of the corresponding compound

Measured affinity: pIC50

[3]:

# Read data from previous talktorials

chembl_df = pd.read_csv(

HERE / "../T002_compound_adme/data/EGFR_compounds_lipinski.csv",

index_col=0,

)

# Look at head

print("Shape of dataframe : ", chembl_df.shape)

chembl_df.head()

# NBVAL_CHECK_OUTPUT

Shape of dataframe : (4635, 10)

[3]:

| molecule_chembl_id | IC50 | units | smiles | pIC50 | molecular_weight | n_hba | n_hbd | logp | ro5_fulfilled | |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | CHEMBL63786 | 0.003 | nM | Brc1cccc(Nc2ncnc3cc4ccccc4cc23)c1 | 11.522879 | 349.021459 | 3 | 1 | 5.2891 | True |

| 1 | CHEMBL35820 | 0.006 | nM | CCOc1cc2ncnc(Nc3cccc(Br)c3)c2cc1OCC | 11.221849 | 387.058239 | 5 | 1 | 4.9333 | True |

| 2 | CHEMBL53711 | 0.006 | nM | CN(C)c1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.221849 | 343.043258 | 5 | 1 | 3.5969 | True |

| 3 | CHEMBL66031 | 0.008 | nM | Brc1cccc(Nc2ncnc3cc4[nH]cnc4cc23)c1 | 11.096910 | 339.011957 | 4 | 2 | 4.0122 | True |

| 4 | CHEMBL53753 | 0.008 | nM | CNc1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.096910 | 329.027607 | 5 | 2 | 3.5726 | True |

[4]:

# Keep only the columns we want

chembl_df = chembl_df[["molecule_chembl_id", "smiles", "pIC50"]]

chembl_df.head()

# NBVAL_CHECK_OUTPUT

[4]:

| molecule_chembl_id | smiles | pIC50 | |

|---|---|---|---|

| 0 | CHEMBL63786 | Brc1cccc(Nc2ncnc3cc4ccccc4cc23)c1 | 11.522879 |

| 1 | CHEMBL35820 | CCOc1cc2ncnc(Nc3cccc(Br)c3)c2cc1OCC | 11.221849 |

| 2 | CHEMBL53711 | CN(C)c1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.221849 |

| 3 | CHEMBL66031 | Brc1cccc(Nc2ncnc3cc4[nH]cnc4cc23)c1 | 11.096910 |

| 4 | CHEMBL53753 | CNc1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.096910 |

Data preparation¶

Data labeling¶

We need to classify each compound as active or inactive. Therefore, we use the pIC50 value.

pIC50 = -log10(IC50)

IC50 describes the amount of substance needed to inhibit, in vitro, a process by 50% .

A common cut-off value to discretize pIC50 data is 6.3, which we will use for our experiment (refer to J. Med. Chem. (2017), 60, 474-485 and the corresponding notebook)

Note that there are several other suggestions for an activity cut-off ranging from an pIC50 value of 5 to 7 in the literature or even to define an exclusion range when not to take data points.

[5]:

# Add column for activity

chembl_df["active"] = np.zeros(len(chembl_df))

# Mark every molecule as active with an pIC50 of >= 6.3, 0 otherwise

chembl_df.loc[chembl_df[chembl_df.pIC50 >= 6.3].index, "active"] = 1.0

# NBVAL_CHECK_OUTPUT

print("Number of active compounds:", int(chembl_df.active.sum()))

print("Number of inactive compounds:", len(chembl_df) - int(chembl_df.active.sum()))

Number of active compounds: 2631

Number of inactive compounds: 2004

[6]:

chembl_df.head()

# NBVAL_CHECK_OUTPUT

[6]:

| molecule_chembl_id | smiles | pIC50 | active | |

|---|---|---|---|---|

| 0 | CHEMBL63786 | Brc1cccc(Nc2ncnc3cc4ccccc4cc23)c1 | 11.522879 | 1.0 |

| 1 | CHEMBL35820 | CCOc1cc2ncnc(Nc3cccc(Br)c3)c2cc1OCC | 11.221849 | 1.0 |

| 2 | CHEMBL53711 | CN(C)c1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.221849 | 1.0 |

| 3 | CHEMBL66031 | Brc1cccc(Nc2ncnc3cc4[nH]cnc4cc23)c1 | 11.096910 | 1.0 |

| 4 | CHEMBL53753 | CNc1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.096910 | 1.0 |

Molecule encoding¶

Now we define a function smiles_to_fp to generate fingerprints from SMILES. For now, we incorporated the choice between the following fingerprints:

maccs

morgan2 and morgan3

[7]:

def smiles_to_fp(smiles, method="maccs", n_bits=2048):

"""

Encode a molecule from a SMILES string into a fingerprint.

Parameters

----------

smiles : str

The SMILES string defining the molecule.

method : str

The type of fingerprint to use. Default is MACCS keys.

n_bits : int

The length of the fingerprint.

Returns

-------

array

The fingerprint array.

"""

# convert smiles to RDKit mol object

mol = Chem.MolFromSmiles(smiles)

if method == "maccs":

return np.array(MACCSkeys.GenMACCSKeys(mol))

if method == "morgan2":

fpg = rdFingerprintGenerator.GetMorganGenerator(radius=2, fpSize=n_bits)

return np.array(fpg.GetFingerprint(mol))

if method == "morgan3":

fpg = rdFingerprintGenerator.GetMorganGenerator(radius=3, fpSize=n_bits)

return np.array(fpg.GetFingerprint(mol))

else:

# NBVAL_CHECK_OUTPUT

print(f"Warning: Wrong method specified: {method}. Default will be used instead.")

return np.array(MACCSkeys.GenMACCSKeys(mol))

[8]:

compound_df = chembl_df.copy()

[9]:

# Add column for fingerprint

compound_df["fp"] = compound_df["smiles"].apply(smiles_to_fp)

compound_df.head(3)

# NBVAL_CHECK_OUTPUT

[9]:

| molecule_chembl_id | smiles | pIC50 | active | fp | |

|---|---|---|---|---|---|

| 0 | CHEMBL63786 | Brc1cccc(Nc2ncnc3cc4ccccc4cc23)c1 | 11.522879 | 1.0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... |

| 1 | CHEMBL35820 | CCOc1cc2ncnc(Nc3cccc(Br)c3)c2cc1OCC | 11.221849 | 1.0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... |

| 2 | CHEMBL53711 | CN(C)c1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.221849 | 1.0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... |

Machine Learning (ML)¶

In the following, we will try several ML approaches to classify our molecules. We will use:

Random Forest (RF)

Support Vector Machine (SVM)

Artificial Neural Network (ANN)

Additionally, we will comment on the results.

The goal is to test the ability of the model to predict data which it has never seen before in order to flag problems known as over fitting and to assess the generalization ability of the model.

We start by defining a function model_training_and_validation which fits a model on a random train-test split of the data and returns measures such as accuracy, sensitivity, specificity and AUC evaluated on the test set. We also plot the ROC curves using plot_roc_curves_for_models.

We then define a function named crossvalidation which executes a cross validation procedure and prints the statistics of the results over the folds.

Helper functions¶

Helper function to plot customized ROC curves. Code inspired by stackoverflow.

[10]:

def plot_roc_curves_for_models(models, test_x, test_y, save_png=False):

"""

Helper function to plot customized roc curve.

Parameters

----------

models: dict

Dictionary of pretrained machine learning models.

test_x: list

Molecular fingerprints for test set.

test_y: list

Associated activity labels for test set.

save_png: bool

Save image to disk (default = False)

Returns

-------

fig:

Figure.

"""

fig, ax = plt.subplots()

# Below for loop iterates through your models list

for model in models:

# Select the model

ml_model = model["model"]

# Prediction probability on test set

test_prob = ml_model.predict_proba(test_x)[:, 1]

# Prediction class on test set

test_pred = ml_model.predict(test_x)

# Compute False postive rate and True positive rate

fpr, tpr, thresholds = metrics.roc_curve(test_y, test_prob)

# Calculate Area under the curve to display on the plot

auc = roc_auc_score(test_y, test_prob)

# Plot the computed values

ax.plot(fpr, tpr, label=(f"{model['label']} AUC area = {auc:.2f}"))

# Custom settings for the plot

ax.plot([0, 1], [0, 1], "r--")

ax.set_xlabel("False Positive Rate")

ax.set_ylabel("True Positive Rate")

ax.set_title("Receiver Operating Characteristic")

ax.legend(loc="lower right")

# Save plot

if save_png:

fig.savefig(f"{DATA}/roc_auc", dpi=300, bbox_inches="tight", transparent=True)

return fig

Helper function to calculate model performance.

[11]:

def model_performance(ml_model, test_x, test_y, verbose=True):

"""

Helper function to calculate model performance

Parameters

----------

ml_model: sklearn model object

The machine learning model to train.

test_x: list

Molecular fingerprints for test set.

test_y: list

Associated activity labels for test set.

verbose: bool

Print performance measure (default = True)

Returns

-------

tuple:

Accuracy, sensitivity, specificity, auc on test set.

"""

# Prediction probability on test set

test_prob = ml_model.predict_proba(test_x)[:, 1]

# Prediction class on test set

test_pred = ml_model.predict(test_x)

# Performance of model on test set

accuracy = accuracy_score(test_y, test_pred)

sens = recall_score(test_y, test_pred)

spec = recall_score(test_y, test_pred, pos_label=0)

auc = roc_auc_score(test_y, test_prob)

if verbose:

# Print performance results

# NBVAL_CHECK_OUTPUT print(f"Accuracy: {accuracy:.2}")

print(f"Sensitivity: {sens:.2f}")

print(f"Specificity: {spec:.2f}")

print(f"AUC: {auc:.2f}")

return accuracy, sens, spec, auc

Helper function to fit a machine learning model on a random train-test split of the data and return the performance measures.

[12]:

def model_training_and_validation(ml_model, name, splits, verbose=True):

"""

Fit a machine learning model on a random train-test split of the data

and return the performance measures.

Parameters

----------

ml_model: sklearn model object

The machine learning model to train.

name: str

Name of machine learning algorithm: RF, SVM, ANN

splits: list

List of desciptor and label data: train_x, test_x, train_y, test_y.

verbose: bool

Print performance info (default = True)

Returns

-------

tuple:

Accuracy, sensitivity, specificity, auc on test set.

"""

train_x, test_x, train_y, test_y = splits

# Fit the model

ml_model.fit(train_x, train_y)

# Calculate model performance results

accuracy, sens, spec, auc = model_performance(ml_model, test_x, test_y, verbose)

return accuracy, sens, spec, auc

Preprocessing: Split the data (will be reused for the other models)

[13]:

fingerprint_to_model = compound_df.fp.tolist()

label_to_model = compound_df.active.tolist()

# Split data randomly in train and test set

# note that we use test/train_x for the respective fingerprint splits

# and test/train_y for the respective label splits

(

static_train_x,

static_test_x,

static_train_y,

static_test_y,

) = train_test_split(fingerprint_to_model, label_to_model, test_size=0.2, random_state=SEED)

splits = [static_train_x, static_test_x, static_train_y, static_test_y]

# NBVAL_CHECK_OUTPUT

print("Training data size:", len(static_train_x))

print("Test data size:", len(static_test_x))

Training data size: 3708

Test data size: 927

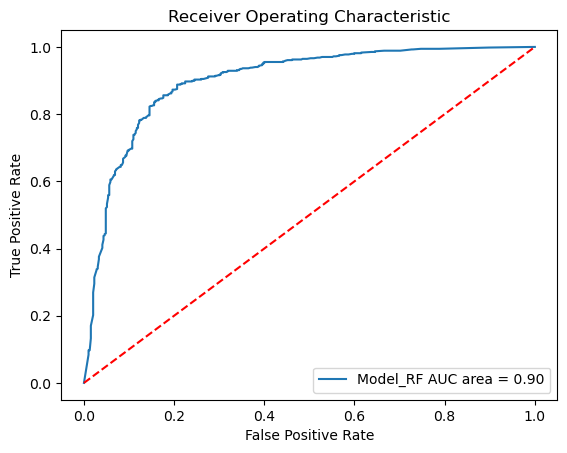

Random forest classifier¶

We start with a random forest classifier, where we first set the parameters.

We train the model on a random train-test split and plot the results.

[14]:

# Set model parameter for random forest

param = {

"n_estimators": 100, # number of trees to grows

"criterion": "entropy", # cost function to be optimized for a split

}

model_RF = RandomForestClassifier(**param)

[15]:

# Fit model on single split

performance_measures = model_training_and_validation(model_RF, "RF", splits)

Sensitivity: 0.90

Specificity: 0.77

AUC: 0.90

[16]:

# Initialize the list that stores all models. First one is RF.

models = [{"label": "Model_RF", "model": model_RF}]

# Plot roc curve

plot_roc_curves_for_models(models, static_test_x, static_test_y);

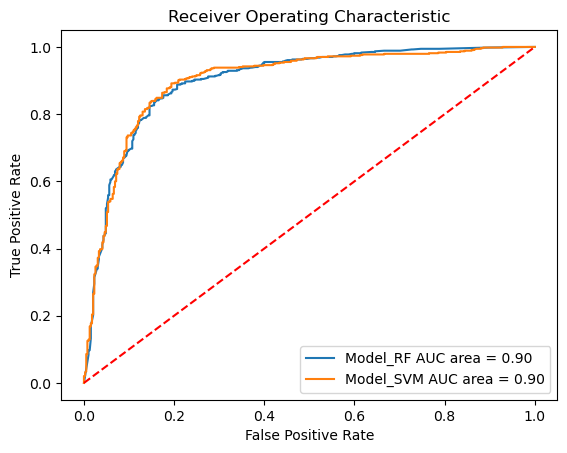

Support vector classifier¶

Here we train a SVM with a radial-basis function kernel (also: squared-exponential kernel). For more information, see sklearn RBF kernel.

[17]:

# Specify model

model_SVM = svm.SVC(kernel="rbf", C=1, gamma=0.1, probability=True)

# Fit model on single split

performance_measures = model_training_and_validation(model_SVM, "SVM", splits)

Sensitivity: 0.92

Specificity: 0.74

AUC: 0.90

[18]:

# Append SVM model

models.append({"label": "Model_SVM", "model": model_SVM})

# Plot roc curve

plot_roc_curves_for_models(models, static_test_x, static_test_y);

Neural network classifier¶

The last approach we try here is a neural network model. We train an MLPClassifier (Multi-layer Perceptron classifier) with 2 layers: the first layer with 5 neurons and the second layer with 3 neurons. As before, we do the crossvalidation procedure and plot the results. For more information on MLP, see sklearn MLPClassifier.

[19]:

# Specify model

model_ANN = MLPClassifier(hidden_layer_sizes=(5, 3), random_state=SEED)

# Fit model on single split

performance_measures = model_training_and_validation(model_ANN, "ANN", splits)

Sensitivity: 0.85

Specificity: 0.73

AUC: 0.86

[20]:

# Append ANN model

models.append({"label": "Model_ANN", "model": model_ANN})

# Plot roc curve

plot_roc_curves_for_models(models, static_test_x, static_test_y, True);

Our models show very good values for all measured values (see AUCs) and thus seem to be predictive.

Cross-validation¶

Next, we will perform cross-validation experiments with the three different models. Therefore, we define a helper function for machine learning model training and validation in a cross-validation loop.

[21]:

def crossvalidation(ml_model, df, n_folds=5, verbose=False):

"""

Machine learning model training and validation in a cross-validation loop.

Parameters

----------

ml_model: sklearn model object

The machine learning model to train.

df: pd.DataFrame

Data set with SMILES and their associated activity labels.

n_folds: int, optional

Number of folds for cross-validation.

verbose: bool, optional

Performance measures are printed.

Returns

-------

None

"""

t0 = time.time()

# Shuffle the indices for the k-fold cross-validation

kf = KFold(n_splits=n_folds, shuffle=True, random_state=SEED)

# Results for each of the cross-validation folds

acc_per_fold = []

sens_per_fold = []

spec_per_fold = []

auc_per_fold = []

# Loop over the folds

for train_index, test_index in kf.split(df):

# clone model -- we want a fresh copy per fold!

fold_model = clone(ml_model)

# Training

# Convert the fingerprint and the label to a list

train_x = df.iloc[train_index].fp.tolist()

train_y = df.iloc[train_index].active.tolist()

# Fit the model

fold_model.fit(train_x, train_y)

# Testing

# Convert the fingerprint and the label to a list

test_x = df.iloc[test_index].fp.tolist()

test_y = df.iloc[test_index].active.tolist()

# Performance for each fold

accuracy, sens, spec, auc = model_performance(fold_model, test_x, test_y, verbose)

# Save results

acc_per_fold.append(accuracy)

sens_per_fold.append(sens)

spec_per_fold.append(spec)

auc_per_fold.append(auc)

# Print statistics of results

print(

f"Mean accuracy: {np.mean(acc_per_fold):.2f} \t"

f"and std : {np.std(acc_per_fold):.2f} \n"

f"Mean sensitivity: {np.mean(sens_per_fold):.2f} \t"

f"and std : {np.std(sens_per_fold):.2f} \n"

f"Mean specificity: {np.mean(spec_per_fold):.2f} \t"

f"and std : {np.std(spec_per_fold):.2f} \n"

f"Mean AUC: {np.mean(auc_per_fold):.2f} \t"

f"and std : {np.std(auc_per_fold):.2f} \n"

f"Time taken : {time.time() - t0:.2f}s\n"

)

return acc_per_fold, sens_per_fold, spec_per_fold, auc_per_fold

Cross-validation

We now apply cross-validation and show the statistics for all three ML models. In real world conditions, cross-validation usually applies 5 or more folds, but for the sake of performance we will reduce it to 3. You can change the value of N_FOLDS in this cell below.

[22]:

N_FOLDS = 3

Note: Next cell takes long to execute

[23]:

for model in models:

print("\n======= ")

print(f"{model['label']}")

crossvalidation(model["model"], compound_df, n_folds=N_FOLDS)

=======

Model_RF

Mean accuracy: 0.83 and std : 0.01

Mean sensitivity: 0.88 and std : 0.02

Mean specificity: 0.76 and std : 0.01

Mean AUC: 0.89 and std : 0.01

Time taken : 1.32s

=======

Model_SVM

Mean accuracy: 0.84 and std : 0.01

Mean sensitivity: 0.90 and std : 0.01

Mean specificity: 0.76 and std : 0.00

Mean AUC: 0.89 and std : 0.01

Time taken : 12.12s

=======

Model_ANN

Mean accuracy: 0.81 and std : 0.00

Mean sensitivity: 0.86 and std : 0.01

Mean specificity: 0.73 and std : 0.01

Mean AUC: 0.87 and std : 0.00

Time taken : 4.31s

We look at the cross-validation performance for molecules encoded using Morgan fingerprint and not MACCS keys.

[24]:

# Reset data frame

compound_df = chembl_df.copy()

[25]:

# Use Morgan fingerprint with radius 3

compound_df["fp"] = compound_df["smiles"].apply(smiles_to_fp, args=("morgan3",))

compound_df.head(3)

# NBVAL_CHECK_OUTPUT

[25]:

| molecule_chembl_id | smiles | pIC50 | active | fp | |

|---|---|---|---|---|---|

| 0 | CHEMBL63786 | Brc1cccc(Nc2ncnc3cc4ccccc4cc23)c1 | 11.522879 | 1.0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... |

| 1 | CHEMBL35820 | CCOc1cc2ncnc(Nc3cccc(Br)c3)c2cc1OCC | 11.221849 | 1.0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... |

| 2 | CHEMBL53711 | CN(C)c1cc2c(Nc3cccc(Br)c3)ncnc2cn1 | 11.221849 | 1.0 | [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, ... |

Note: Next cell takes long to execute

[26]:

for model in models:

if model["label"] == "Model_SVM":

# SVM is super slow with long fingerprints

# and will have a performance similar to RF

# We can skip it in this test, but if you want

# to run it, feel free to replace `continue` with `pass`

continue

print("\n=======")

print(model["label"])

crossvalidation(model["model"], compound_df, n_folds=N_FOLDS)

=======

Model_RF

Mean accuracy: 0.86 and std : 0.01

Mean sensitivity: 0.90 and std : 0.01

Mean specificity: 0.81 and std : 0.00

Mean AUC: 0.92 and std : 0.01

Time taken : 5.21s

=======

Model_ANN

Mean accuracy: 0.81 and std : 0.01

Mean sensitivity: 0.84 and std : 0.01

Mean specificity: 0.77 and std : 0.00

Mean AUC: 0.88 and std : 0.01

Time taken : 12.77s

Discussion¶

Which model performed best on our data set and why?

All three models perform (very) well on our dataset. The best models are the random forest and support vector machine models which showed a mean AUC of about 90%. Our neural network showed slightly lower results.

There might be several reasons that random forest and support vector machine models performed best. Our dataset might be easily separable in active/inactive with some simple tree-like decisions or with the radial basis function, respectively. Thus, there is not such a complex pattern in the fingerprints to do this classification.

A cause for the slightly poorer performance of the ANN could be that there was simply too few data to train the model on.

Additionally, it is always advisable to have another external validation set for model evaluation.

Was MACCS the right choice?

Obviously, MACCS was good to start training and validating models to see if a classification is possible.

However, MACCS keys are rather short (166 bit) compared to others (2048 bit), as for example Morgan fingerprint. As shown in the last simulation, having longer fingerprint helps the learning process. All tested models performed slightly better using Morgan fingerprints (see mean AUC increase).

Where can we go from here?¶

We successfully trained several models.

The next step could be to use these models to do a classification with an unknown screening dataset to predict novel potential EGFR inhibitors.

An example for a large screening data set is e.g. MolPort with over 7 million compounds.

Our models could be used to rank the MolPort compounds and then further study those with the highest predicted probability of being active.

For such an application, see also the TDT Tutorial developed by S. Riniker and G. Landrum, where they trained a fusion model to screen eMolecules for new anti-malaria drugs.

Quiz¶

How can you apply ML for virtual screening?

Which machine learning algorithms do you know?

What are necessary prerequisites to successfully apply ML?